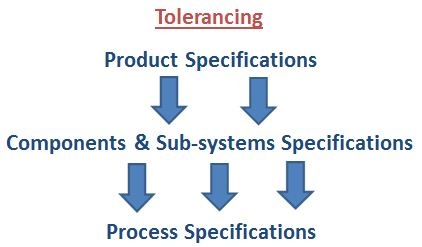

Suppose that you have designed a brand-new product with many improved features that will help create a much better customer experience. Now you must ensure that it is manufactured to the highest quality and reliability standards, so that it earns the excellent long-term reputation it deserves among customers. You need to move quickly and seamlessly from research and development into mass production. To scale up production, the design team needs to give suppliers the right component specifications, and these specifications will then be translated into “process windows” for the actual manufacturing processes.

Optimization

Whether the manufacturing plant is next door or in another country, and whether it is owned by your company or by an external supplier, the design team must deliver the right “recipe” (optimized specifications, optimized manufacturing settings, etc.) so that the product is easy to manufacture. If this “tolerancing” phase is not carried out properly, manufacturing engineers will have to rely on their own creativity to resolve mismatches and adjust product settings. This is clearly not the best option: it means tampering with the product characteristics, and it delays time-to-market.

Capability Estimates

Unfortunately, all processes are affected by various sources of variation (environmental fluctuations as well as inherent process variability), and this variability often causes major quality problems. If the specification limits are wide enough compared to the overall process variability, the result will be high-quality products at low cost (a high Ppk capability value). If not, the percentage of out-of-spec products will increase substantially.
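To make the link between spread, specification width and Ppk concrete, here is a minimal sketch in plain Python. It uses the usual overall-performance formula Ppk = min(USL − mean, mean − LSL) / (3s), where s is the overall sample standard deviation. The function names, the spec limits and the two data sets are hypothetical, and the conversion of Ppk into an expected out-of-spec fraction assumes the output is normally distributed, which real data may not be.

```python
import statistics
from statistics import NormalDist


def ppk(samples, lsl, usl):
    """Overall capability: distance from the mean to the nearest spec limit, in units of 3s."""
    mean = statistics.fmean(samples)
    s = statistics.stdev(samples)  # overall sample standard deviation
    return min(usl - mean, mean - lsl) / (3 * s)


def normal_out_of_spec(samples, lsl, usl):
    """Expected out-of-spec fraction, ASSUMING the output is normally distributed."""
    dist = NormalDist(statistics.fmean(samples), statistics.stdev(samples))
    return dist.cdf(lsl) + (1.0 - dist.cdf(usl))


if __name__ == "__main__":
    LSL, USL = 9.4, 10.6  # hypothetical specification limits
    tight = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.9, 10.1]  # low process spread
    wide = [9.5, 10.4, 10.0, 9.7, 10.5, 10.0, 9.6, 10.3]   # same mean, larger spread
    for name, data in (("tight", tight), ("wide", wide)):
        print(name, round(ppk(data, LSL, USL), 2), normal_out_of_spec(data, LSL, USL))
```

Both data sets are centered on 10.0; only the spread differs, and that alone moves Ppk from comfortably capable to clearly not capable.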

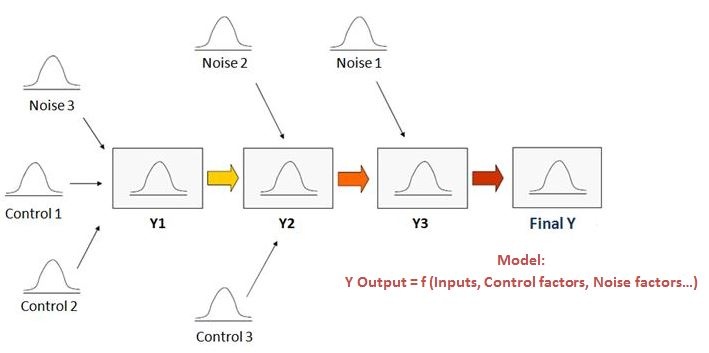

Consider the graph above. There are many inputs and only one output. Some inputs are controllable parameters, while others are uncontrollable noise factors.

At this stage, only a few prototypes may be available to validate the design concept. However, models based on pilot-scale Designs of Experiments (DOE), Computer-Aided Design (CAD), or known physical relationships can show how input variability propagates to the final output. From these models, you can predict the capability values to expect once full production is launched.
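When the model is an explicit equation y = f(x_1, …, x_k), it helps to see what “propagating input variability” means on paper. The block below shows the standard first-order approximation (often called the delta method), included only as an analytical point of comparison: it is not the Monte Carlo approach this article recommends, it assumes independent inputs, and it is exact only when f is linear. The example coefficients, standard deviations and spec limits are hypothetical.

```latex
% First-order propagation for y = f(x_1, \dots, x_k), independent inputs
% with means \mu_i and standard deviations \sigma_i
\mu_y \approx f(\mu_1, \dots, \mu_k), \qquad
\sigma_y^2 \approx \sum_{i=1}^{k} \left( \left. \frac{\partial f}{\partial x_i} \right|_{\boldsymbol{\mu}} \right)^{2} \sigma_i^{2}

% Predicted overall capability from the propagated mean and standard deviation
\widehat{P}_{pk} = \frac{\min\left(\mathrm{USL} - \mu_y,\; \mu_y - \mathrm{LSL}\right)}{3\,\sigma_y}

% Hypothetical example: y = 2a + 0.5b + 0.1c,
% \sigma_a = 0.05,\ \sigma_b = 0.2,\ \sigma_c = 3.0,\ \mu_y = 17,\ \mathrm{LSL} = 16.2,\ \mathrm{USL} = 17.8
\sigma_y^2 = (2 \cdot 0.05)^2 + (0.5 \cdot 0.2)^2 + (0.1 \cdot 3.0)^2 = 0.01 + 0.01 + 0.09 = 0.11,
\quad \sigma_y \approx 0.332,
\quad \widehat{P}_{pk} \approx \frac{0.8}{3 \cdot 0.332} \approx 0.80
```

For strongly nonlinear models or non-normal inputs this approximation can be misleading, which is one reason to simulate instead.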

Enter the Monte Carlo Simulation Method

The Monte Carlo method is a probabilistic technique that generates a large number of random samples in order to simulate variability in a complex system. The objective is to simulate and test as early as possible, so that you can anticipate quality problems, avoid costly design changes at a later phase, and make life much easier on the shop floor.
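The core of the method fits in a few lines: draw random values for every input from its assumed distribution, push each combination through the model, and summarize the simulated outputs. The sketch below is plain Python, not how Minitab Engage implements it; the transfer function, the input distributions and the spec limits are all hypothetical.

```python
import random
import statistics

LSL, USL = 16.2, 17.8  # hypothetical specification limits on the output


def transfer_function(a: float, b: float, temp: float) -> float:
    # Hypothetical model: two controllable inputs (a, b) and one
    # uncontrollable noise factor (ambient temperature). Illustrative only.
    return 2.0 * a + 0.5 * b + 0.1 * temp


def simulate(n: int = 100_000, seed: int = 1) -> list[float]:
    """Draw n random input combinations and push each one through the model."""
    rng = random.Random(seed)
    ys = []
    for _ in range(n):
        a = rng.gauss(5.0, 0.05)        # input a ~ Normal(mean 5.0, sd 0.05)
        b = rng.gauss(10.0, 0.2)        # input b ~ Normal(mean 10.0, sd 0.2)
        temp = rng.uniform(15.0, 25.0)  # noise factor ~ Uniform(15, 25)
        ys.append(transfer_function(a, b, temp))
    return ys


def summarize(ys: list[float]) -> dict:
    """Predicted mean, spread, Ppk and observed out-of-spec fraction."""
    mean, s = statistics.fmean(ys), statistics.stdev(ys)
    return {
        "mean": mean,
        "sd": s,
        "ppk": min(USL - mean, mean - LSL) / (3 * s),
        "out_of_spec": sum(y < LSL or y > USL for y in ys) / len(ys),
    }


if __name__ == "__main__":
    print(summarize(simulate()))
```

Counting simulated outputs outside the limits does not require the output to be normal, which is an advantage over formula-based predictions when inputs such as the uniform noise factor here are not normal.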

Monte Carlo has a reputation for being difficult, but software tools have made it much easier. For example, check out Minitab Engage, our software for executing and reporting on quality improvement projects of all types. It includes a first-in-class Monte Carlo Simulation tool ideal for manufacturing engineers.

Each input in your model is described by a probability distribution, usually summarized by its mean and variance. Identifying the correct distribution may require deeper knowledge of how the input behaves. As a simpler alternative, you can use a triangular distribution, which only requires the minimum, maximum, and most probable values.
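Python's standard library already provides a triangular sampler, `random.triangular(low, high, mode)`, so an input known only by its minimum, most probable and maximum values can be simulated directly. The sketch below also computes the distribution's theoretical mean and standard deviation so the samples can be checked against them; the input values 9.7 / 10.0 / 10.4 are hypothetical.

```python
import math
import random
import statistics


def triangular_moments(low: float, high: float, mode: float) -> tuple[float, float]:
    """Theoretical mean and standard deviation of a triangular distribution."""
    mean = (low + high + mode) / 3
    var = (low**2 + high**2 + mode**2 - low * high - low * mode - high * mode) / 18
    return mean, math.sqrt(var)


def sample_triangular(low: float, high: float, mode: float,
                      n: int = 100_000, seed: int = 42) -> list[float]:
    # Note the argument order of random.triangular: (low, high, mode).
    rng = random.Random(seed)
    return [rng.triangular(low, high, mode) for _ in range(n)]


if __name__ == "__main__":
    # Hypothetical input: min 9.7, most probable 10.0, max 10.4 (skewed to the right).
    low, high, mode = 9.7, 10.4, 10.0
    xs = sample_triangular(low, high, mode)
    print("theory:", triangular_moments(low, high, mode))
    print("sample:", statistics.fmean(xs), statistics.stdev(xs))
```

Because the most probable value is not centered between the extremes, the mean (about 10.03) differs from the mode, which is exactly the kind of asymmetry a triangular input can capture.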

Sensitivity Analysis

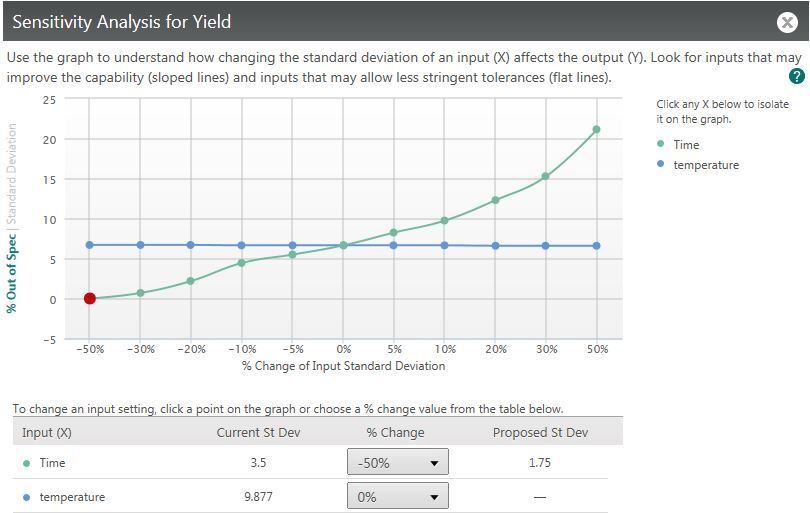

When running a Monte Carlo simulation, if the predicted capability index is insufficient and improvements are needed to reach an acceptable quality level, the variability of some inputs will have to be reduced. Variation reduction is a costly activity, so you should focus on the few variables that will deliver the largest gains in capability.

The graph above illustrates a sensitivity analysis, in which reducing the standard deviation of one particular input (green curve) is expected to produce a large reduction in the proportion of out-of-spec products.
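A simple way to run this kind of sensitivity analysis by simulation is to shrink the standard deviation of one input at a time and re-estimate the out-of-spec fraction. The sketch below does that with a hypothetical linear model and hypothetical input values; it halves each standard deviation, whereas the curve in the graph sweeps a whole range of reductions. The same random seed is reused for every run (common random numbers) so the differences reflect the change in inputs rather than sampling noise.

```python
import random

# Hypothetical inputs: name -> (mean, standard deviation). Illustrative values only.
INPUTS = {"a": (5.0, 0.05), "b": (10.0, 0.2), "c": (20.0, 3.0)}
LSL, USL = 16.2, 17.8  # hypothetical output specification limits


def model(x: dict) -> float:
    # Hypothetical linear transfer function.
    return 2.0 * x["a"] + 0.5 * x["b"] + 0.1 * x["c"]


def out_of_spec(inputs: dict, n: int = 50_000, seed: int = 7) -> float:
    """Monte Carlo estimate of the fraction of outputs outside [LSL, USL]."""
    rng = random.Random(seed)  # same seed for every run: common random numbers
    bad = 0
    for _ in range(n):
        x = {name: rng.gauss(mu, sd) for name, (mu, sd) in inputs.items()}
        y = model(x)
        bad += y < LSL or y > USL
    return bad / n


def sensitivity(inputs: dict, factor: float = 0.5) -> dict:
    """Out-of-spec fraction when each input's sd alone is multiplied by `factor`."""
    results = {}
    for name, (mu, sd) in inputs.items():
        trial = dict(inputs)
        trial[name] = (mu, sd * factor)
        results[name] = out_of_spec(trial)
    return results


if __name__ == "__main__":
    print("baseline:", out_of_spec(INPUTS))
    for name, frac in sensitivity(INPUTS).items():
        print(f"halve sd of {name}: {frac:.4f}")
```

In this made-up model, input c dominates the output variance, so halving its spread cuts the out-of-spec fraction far more than the same effort spent on a or b.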

Robust Design

Some easily controllable parameters in your system (control factors) may interact with noise factors. This means that the effect of a noise factor may change depending on the setting of a control factor. If so, these noise*control interactions can be used to mitigate noise effects and make the process or product more robust to environmental fluctuations. Nonlinear effects may also be exploited to improve robustness.
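A small simulation shows how a noise*control interaction can be exploited. In the hypothetical model below, the slope of the output with respect to the noise factor is (2.0 − control), so setting the control factor to 2.0 cancels the noise effect entirely. The coefficients and the standard normal noise are assumptions chosen to make the effect easy to see.

```python
import random
import statistics


def response(control: float, noise: float) -> float:
    # Hypothetical transfer function with a noise*control interaction term.
    # Its slope with respect to the noise factor is (2.0 - 1.0 * control),
    # so the noise effect cancels out when control = 2.0.
    return 10.0 + 1.5 * control + 2.0 * noise - 1.0 * control * noise


def output_sd(control: float, n: int = 20_000, seed: int = 3) -> float:
    """Simulated output standard deviation for a fixed control setting."""
    rng = random.Random(seed)
    ys = [response(control, rng.gauss(0.0, 1.0)) for _ in range(n)]  # noise ~ N(0, 1)
    return statistics.stdev(ys)


if __name__ == "__main__":
    for setting in (0.0, 1.0, 2.0, 3.0):
        print(f"control = {setting}: output sd = {output_sd(setting):.3f}")
```

Note that the robust setting also moves the output mean (from 10.0 at control = 0 to 13.0 at control = 2), so in practice the mean would then need to be re-centered using another factor that does not interact with the noise.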

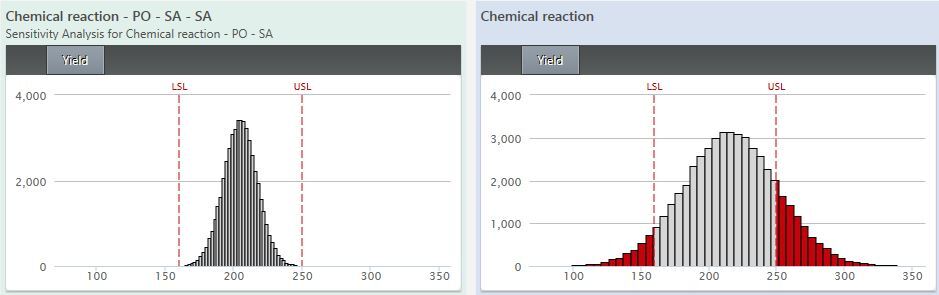

In the graph above, after optimization and a sensitivity analysis, the variability of one input has been reduced, so the output variability, which was too large compared to the specifications (right part of the diagram), now falls well within them (left part).

Conclusion

This simulation procedure is iterative:

- Design with nominal values.

- Simulate variability to predict capability indices.

- Analyze sensitivity.

- Redesign or re-center until the system meets all requirements.
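The four steps above can be sketched as a loop: simulate the current design, stop if the predicted Ppk is acceptable, otherwise re-center the output or reduce the spread of the input that the sensitivity analysis flags as most influential. Everything here is hypothetical: the linear model, the starting nominal values, the spec limits, the re-centering rule and the 1.33 Ppk target, which is a commonly used benchmark rather than a value from this article.

```python
import random
import statistics

LSL, USL = 16.2, 17.8  # hypothetical output specification limits
TARGET_PPK = 1.33      # assumed acceptance threshold, not from the article


def model(a: float, b: float, c: float) -> float:
    # Hypothetical linear transfer function (illustrative only).
    return 2.0 * a + 0.5 * b + 0.1 * c


def simulate(design: dict, n: int = 20_000, seed: int = 11) -> tuple[float, float]:
    """Monte Carlo estimate of (Ppk, output mean); design maps name -> (mean, sd)."""
    rng = random.Random(seed)
    ys = [
        model(rng.gauss(*design["a"]), rng.gauss(*design["b"]), rng.gauss(*design["c"]))
        for _ in range(n)
    ]
    mean, s = statistics.fmean(ys), statistics.stdev(ys)
    return min(USL - mean, mean - LSL) / (3 * s), mean


def halved(design: dict, name: str) -> dict:
    """Copy of the design with one input's standard deviation cut in half."""
    trial = dict(design)
    mu, sd = trial[name]
    trial[name] = (mu, sd / 2)
    return trial


def iterate_design(design: dict, max_rounds: int = 10):
    midpoint = (LSL + USL) / 2
    for round_no in range(1, max_rounds + 1):
        ppk, mean = simulate(design)                  # simulate variability
        if ppk >= TARGET_PPK:
            return design, ppk, round_no              # requirements met
        if abs(mean - midpoint) > 0.05:
            # Re-center: shift input a (dy/da = 2.0 in this model).
            mu, sd = design["a"]
            design = {**design, "a": (mu - (mean - midpoint) / 2.0, sd)}
            continue
        # Sensitivity analysis: pick the input whose sd reduction helps most.
        best = max(design, key=lambda name: simulate(halved(design, name))[0])
        design = halved(design, best)                 # redesign
    return design, ppk, max_rounds


if __name__ == "__main__":
    start = {"a": (5.2, 0.05), "b": (10.0, 0.2), "c": (20.0, 3.0)}  # nominal design
    final, ppk, rounds = iterate_design(start)
    print(rounds, round(ppk, 2), final)
```

Starting off-center, the loop first re-centers input a, then twice halves the spread of input c, the dominant contributor in this made-up model, before the predicted Ppk clears the target.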

Monte Carlo simulations are often a crucial part of DFSS (Design for Six Sigma) or DMADV (Define, Measure, Analyze, Design, Verify) projects. Innovation plays an increasingly vital role as economies become more advanced and dynamic, and as we move into this innovation-driven stage, simulation-based approaches like this one will become even more important.

Monte Carlo simulation used to involve high computational costs, but this is no longer the case, thanks to today's powerful computing tools.