An exciting new study sheds light on the relationship between P values and the replication of experimental results. This study highlights issues that I've emphasized repeatedly—it is crucial to interpret P values correctly, and significant results must be replicated to be trustworthy.

The study also supports my disagreement with the decision by the journal Basic and Applied Social Psychology to ban P values and confidence intervals. About six months ago, I laid out my case that P values and confidence intervals provide important information.

The authors of the August 2015 study, Estimating the reproducibility of psychological science, set out to assess the rate and predictors of reproducibility in the field of psychology. Unfortunately, there was a shortage of replication studies available for them to analyze. The shortage exists because, sadly, it’s generally easier for authors to publish the results of new studies than the results of replication studies.

To get the reproducibility study off the ground, the group of 300 researchers associated with the project had to conduct their own replication studies first! These researchers conducted replications of 100 psychology studies that had already obtained statistically significant results and had been accepted for publication by three respected psychology journals.

Overall, the study found that only 36% of the replication studies were themselves statistically significant. This low rate reaffirms the importance of replicating the results before accepting a finding as being experimentally established!

Scientific progress is not neat and tidy. After all, we’re trying to model a complex reality using samples. False positives and negatives are an inherent part of the process. These issues are why I oppose the "one and done" approach of accepting a single significant study as the truth. Replication studies are as important as the original study.

The study also assessed whether various factors can predict the likelihood that a replication study will be statistically significant. The authors looked at factors such as the characteristics of the investigators, the hypotheses, and the analytical methods, as well as indicators of the strength of the original evidence, such as the P value.

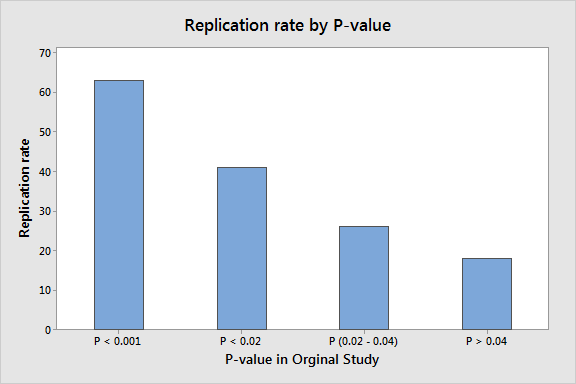

Most factors did not predict reproducibility. However, the study found that the P value of the original study did a pretty good job of predicting it! The graph shows how lower P values in the original studies are associated with a higher rate of statistically significant results in the follow-up studies.

Right now it’s not looking like such a good idea to ban P values! Clearly, P values provide important information about which studies warrant more skepticism.
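To get a feel for why a lower original P value should go along with better odds of replication, here is a minimal simulation sketch in Python. It does not use the study's data or reproduce its method. Every number in it is an assumption chosen for illustration: 20% of the tested hypotheses are real effects of 0.5 standard deviations, each study has 30 observations, the standard deviation is known (a simple z-test), and only significant original studies get "published" and replicated. The function names are hypothetical as well.

```python
import math
import random

ALPHA = 0.05

# Bins for the ORIGINAL study's P value: (label, lower bound, upper bound).
BINS = [
    ("p < 0.001", 0.0, 0.001),
    ("0.001 <= p < 0.01", 0.001, 0.01),
    ("0.01 <= p < 0.05", 0.01, 0.05),
]


def two_sided_p(z):
    """Two-sided P value for a z statistic under the standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


def run_study(effect, n, rng):
    """Simulate one study and return its two-sided P value.

    The z statistic for the mean of n draws from N(effect, 1) is
    distributed as N(effect * sqrt(n), 1), so we draw it directly.
    """
    z = rng.gauss(effect * math.sqrt(n), 1.0)
    return two_sided_p(z)


def replication_rate_by_bin(n_studies=100_000, share_true=0.2,
                            effect=0.5, n=30, seed=1):
    """Share of significant replications, grouped by original P value.

    All defaults are illustrative assumptions, not values from the study.
    """
    rng = random.Random(seed)
    counts = {label: [0, 0] for label, _, _ in BINS}  # [published, replicated]
    for _ in range(n_studies):
        # Each hypothesis is either a real effect or a true null.
        true_effect = effect if rng.random() < share_true else 0.0
        p_original = run_study(true_effect, n, rng)
        if p_original >= ALPHA:
            continue  # only significant originals are "published"
        # The replication repeats the same design on a fresh sample.
        p_replication = run_study(true_effect, n, rng)
        for label, low, high in BINS:
            if low <= p_original < high:
                counts[label][0] += 1
                counts[label][1] += int(p_replication < ALPHA)
    # Skip empty bins to avoid dividing by zero on very small runs.
    return {label: rep / pub for label, (pub, rep) in counts.items() if pub}


if __name__ == "__main__":
    for label, rate in replication_rate_by_bin().items():
        print(f"original {label}: {rate:.0%} of replications significant")
```

Under these assumptions, the replication rate falls as the original P value rises. Significant results near 0.05 contain a larger share of false positives, and a false positive has only a 5% chance of reaching significance again. Changing the assumed share of real effects or the assumed effect size shifts the rates, but the ordering across bins is the pattern to look for.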

The study results are consistent with what I wrote in Five Guidelines for Using P Values:

- The exact P value matters—not just whether a result is significant or not.

- A P value near 0.05 isn’t worth much by itself.

- Replication is crucial.

It’s important to note that while the replication rate in psychology is probably different from the rate in other fields of study, the general principles should apply elsewhere.