Kappa statistics are commonly used to indicate the degree of agreement of nominal assessments made by multiple appraisers. They are typically used in visual inspection to identify defects. For example, inspectors might rate defects on TV sets: Do they consistently agree on their classifications of scratches, low picture quality, poor sound? Another application could be patients examined by different doctors for a particular disease: How often will the doctors' diagnoses of the condition agree?

A Kappa study will enable you to understand whether an appraiser is consistent with himself (within-appraiser agreement), coherent with his colleagues (inter-appraiser agreement) or with a reference value (standard) provided by an expert. If the kappa value is poor, it probably means that some additional training is required. The higher the kappa value, the stronger the degree of agreement.

When:

- Kappa = 1, perfect agreement exists.

- Kappa < 0, agreement is weaker than expected by chance; this rarely happens.

- Kappa close to 0, the degree of agreement is the same as would be expected by chance.

But what exactly is the meaning of a Kappa value of 0?

Remember your years at school? Suppose that you have to sit a very difficult multiple-choice examination and that, for this particular subject, you unfortunately had no time at all to review any course material because of family constraints and other very understandable and valid reasons. Suppose that each question in this exam offered five possible choices and only one correct answer.

If you tick randomly to select one choice per question, you might end up having 20% correct answers, by chance only. Not bad after all, considering the minimal amount of effort involved, but in this case, a 20% agreement with the correct answers would result in a…kappa score of 0.
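The exam scenario can be checked with a short simulation. This is only a sketch: it assumes the usual definition kappa = (observed agreement − chance agreement) / (1 − chance agreement), that each of the five choices is equally likely to be correct, and that the student guesses uniformly at random. The function names and the number of questions are hypothetical, chosen for illustration.

```python
import random


def kappa_from_proportions(p_observed, p_chance):
    """Kappa as observed agreement corrected for chance agreement."""
    return (p_observed - p_chance) / (1 - p_chance)


def simulate_random_guessing(n_questions, n_choices=5, seed=0):
    """Return the fraction of questions answered correctly by pure guessing."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    correct = 0
    for _ in range(n_questions):
        answer_key = rng.randrange(n_choices)  # the correct choice
        guess = rng.randrange(n_choices)       # the student's random tick
        correct += guess == answer_key
    return correct / n_questions


p_chance = 1 / 5  # five choices, one correct answer
p_observed = simulate_random_guessing(100_000)
kappa = kappa_from_proportions(p_observed, p_chance)

print(f"observed agreement: {p_observed:.3f}")
print(f"kappa:              {kappa:.3f}")
```

With a large number of questions, the observed agreement settles near 20% and the kappa value near 0, which is the point of the exam analogy: agreement that is no better than guessing earns no credit.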

Kappa Measure in Attribute Agreement Analysis

In an attribute agreement analysis, the kappa measure takes into account the agreement occurring by chance only.

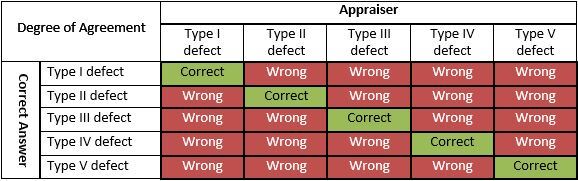

The table below shows the probability of by-chance agreement between the correct answer and the appraiser's assessment:

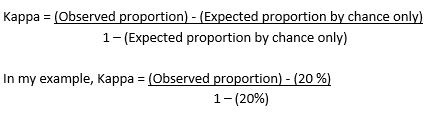

To estimate the Kappa value, we need to compare the observed proportion of correct answers to the expected proportion of correct answers (based on chance only).
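That comparison is usually written in the following standard form. The symbols are introduced here for illustration and are not taken from the original figures: $p_o$ is the observed proportion of agreement and $p_e$ is the proportion of agreement expected by chance.

$$
\kappa = \frac{p_o - p_e}{1 - p_e}
$$

For the exam example, random guessing gives $p_o = p_e = 0.20$, so

$$
\kappa = \frac{0.20 - 0.20}{1 - 0.20} = 0 .
$$

As a hypothetical contrast, a student who answered 60% of the same questions correctly would score

$$
\kappa = \frac{0.60 - 0.20}{1 - 0.20} = 0.5 .
$$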

Kappas can be used only with binary or nominal-scale ratings. They are not really relevant for ordered-categorical ratings (for example "good," "fair," "poor").
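For nominal ratings such as defect categories, the calculation might look like the sketch below. It uses Cohen's kappa, one common variant, in which chance agreement is estimated from how often each rater uses each category. Statistical software may use a different variant, so this is not presented as the method behind the figures above. The function name and the ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equal-length sequences of nominal ratings."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(ratings_a)

    # Observed proportion of items on which the two raters agree.
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Chance agreement: for each category, multiply the two raters'
    # proportions of use, then sum over categories.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2

    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical data: ten TV sets rated by an expert (the standard)
# and by one appraiser.
standard = ["scratch", "picture", "sound", "scratch", "picture",
            "sound", "scratch", "picture", "sound", "scratch"]
appraiser = ["scratch", "picture", "sound", "scratch", "sound",
             "sound", "picture", "picture", "sound", "scratch"]

kappa = cohen_kappa(standard, appraiser)
print(f"kappa vs. standard: {kappa:.2f}")  # about 0.70 for this data
```

Here the appraiser matches the standard on 8 of 10 sets, but because some of that agreement would be expected by chance, the kappa value is lower than the raw 80% agreement.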

Kappas are not restricted to visual inspection in a manufacturing environment. A call center might use this approach to rate the way incoming calls are dealt with, or a tech support service might use it to rate the answers provided by employees. In a hospital, this approach could be used to rate the adequacy of health procedures implemented for different types of situations or different symptoms.

Where could you use Kappa studies?