Can you trust your data?

That's the very first question we need to ask when we perform a statistical analysis. If the data's no good, it doesn't matter what statistical methods we employ, nor how much expertise we have in analyzing data. If we start with bad data, we'll end up with unreliable results. Garbage in, garbage out, as they say.

So, can you trust your data? Are you positive? Because, let's admit it, many of us forget to ask that question altogether, or respond too quickly and confidently.

You can’t just assume you have good data; you need to know you do. That may require a little more work up front, but the energy you spend getting good data will pay off in the form of better decisions and bigger improvements.

Here are 3 critical actions you can take to maximize your chance of getting data that will lead to correct conclusions.

1: Plan How, When, and What to Measure—and Who Will Do It

Failing to plan is a great way to get unreliable data. That’s because a solid plan is the key to successful data collection. Asking why you’re gathering data at the very start of a project will help you pinpoint the data you really need. A data collection plan should clarify:

- What data will be collected

- Who will collect it

- When it will be collected

- Where it will be collected

- How it will be collected

Answering these questions in advance will put you well on your way to getting meaningful data.
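One lightweight way to hold yourself to those five questions is to write the plan down as a small record and check it for blanks before anyone starts measuring. The sketch below is only an illustration: the class name `DataCollectionPlan`, its field names, and the sample answers are all hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class DataCollectionPlan:
    """Hypothetical record with one field per planning question."""
    what: str = ""
    who: str = ""
    when: str = ""
    where: str = ""
    how: str = ""

    def unanswered(self):
        """Return the names of the questions that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Example with made-up answers: three questions are still open.
plan = DataCollectionPlan(what="fill weight in grams", who="line operator")
print("Still to decide:", plan.unanswered())
```

If `unanswered()` returns anything, the plan is not finished yet.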

2: Test Your Measurement System

Many quality improvement projects require measurement data for factors like weight, diameter, or length and width. Not verifying the accuracy of your measurements practically guarantees that your data—and thus your results—are not reliable.

A branch of statistics called Measurement System Analysis lets you quickly assess and improve your measurement system so you can be sure you’re collecting data that is accurate and precise.

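When gathering quantitative data, a Gage Repeatability and Reproducibility (R&R) analysis checks whether instruments and operators are measuring parts consistently. Repeatability is the variation seen when the same operator measures the same part several times with the same instrument; reproducibility is the variation between operators measuring the same parts.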
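To make the idea concrete, here is a minimal sketch of the variance components behind a crossed Gage R&R study, estimated with a standard two-way ANOVA. It assumes a balanced study (every operator measures every part the same number of times, at least twice). The function name `gage_rr`, the dictionary layout, and the readings are illustrative assumptions; statistical software reports considerably more detail than this.

```python
from statistics import mean


def gage_rr(measurements):
    """Estimate Gage R&R variance components with a two-way ANOVA.

    measurements: dict mapping (part, operator) -> list of repeated readings.
    Assumes a balanced, crossed study with at least two readings per cell.
    """
    parts = sorted({p for p, _ in measurements})
    opers = sorted({o for _, o in measurements})
    n_p, n_o = len(parts), len(opers)
    n_r = len(next(iter(measurements.values())))

    grand = mean(x for cell in measurements.values() for x in cell)
    part_mean = {p: mean(x for o in opers for x in measurements[(p, o)]) for p in parts}
    oper_mean = {o: mean(x for p in parts for x in measurements[(p, o)]) for o in opers}
    cell_mean = {key: mean(cell) for key, cell in measurements.items()}

    # Sums of squares for parts, operators, their interaction, and error.
    ss_part = n_o * n_r * sum((part_mean[p] - grand) ** 2 for p in parts)
    ss_oper = n_p * n_r * sum((oper_mean[o] - grand) ** 2 for o in opers)
    ss_inter = n_r * sum(
        (cell_mean[(p, o)] - part_mean[p] - oper_mean[o] + grand) ** 2
        for p in parts for o in opers
    )
    ss_error = sum(
        (x - cell_mean[key]) ** 2 for key, cell in measurements.items() for x in cell
    )

    ms_part = ss_part / (n_p - 1)
    ms_oper = ss_oper / (n_o - 1)
    ms_inter = ss_inter / ((n_p - 1) * (n_o - 1))
    ms_error = ss_error / (n_p * n_o * (n_r - 1))

    # Variance components; negative estimates are truncated to zero.
    repeatability = ms_error
    interaction = max(0.0, (ms_inter - ms_error) / n_r)
    operator = max(0.0, (ms_oper - ms_inter) / (n_p * n_r))
    part_to_part = max(0.0, (ms_part - ms_inter) / (n_o * n_r))

    reproducibility = operator + interaction
    grr = repeatability + reproducibility
    total = grr + part_to_part
    return {
        "repeatability": repeatability,
        "reproducibility": reproducibility,
        "gage_rr": grr,
        "part_to_part": part_to_part,
        "pct_contribution": 100 * grr / total if total else float("nan"),
    }


# Made-up readings: 3 parts x 2 operators x 2 repeats.
study = {
    ("P1", "A"): [10.1, 10.0], ("P1", "B"): [10.3, 10.2],
    ("P2", "A"): [12.0, 12.1], ("P2", "B"): [12.2, 12.3],
    ("P3", "A"): [13.9, 14.0], ("P3", "B"): [14.2, 14.1],
}
result = gage_rr(study)
print(f"Gage R&R share of total variance: {result['pct_contribution']:.1f}%")
```

The smaller the Gage R&R share of total variance, the more of the variation you observe comes from real differences between parts rather than from the measurement system.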

If you’re grading parts or identifying defects, an Attribute Agreement Analysis verifies that different evaluators are making judgments consistent with each other and with established standards.
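As a rough sketch of what "agreement" means in numbers, the example below compares two sets of pass/fail ratings using simple percent agreement and Cohen's kappa, which corrects for the agreement you would expect by chance. Cohen's kappa is one common choice and is used here as an assumption; it is not necessarily the statistic your software reports. The function names and ratings are made up for illustration.

```python
import math
from collections import Counter


def percent_agreement(ratings_a, ratings_b):
    """Fraction of items on which the two sets of ratings match."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and the same length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return matches / len(ratings_a)


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(ratings_a)
    p_observed = percent_agreement(ratings_a, ratings_b)
    freq_a, freq_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: probability both raters pick the same category
    # if each rated independently at their own observed frequencies.
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_chance == 1.0:
        return math.nan  # kappa is undefined when only one category is ever used
    return (p_observed - p_chance) / (1 - p_chance)


# Made-up ratings: one appraiser compared against the known standard.
standard = ["pass", "pass", "fail", "fail", "pass", "fail", "pass", "pass"]
appraiser = ["pass", "fail", "fail", "fail", "pass", "fail", "pass", "pass"]
print("Percent agreement:", percent_agreement(appraiser, standard))
print("Cohen's kappa:", round(cohens_kappa(appraiser, standard), 3))
```

The same two functions can compare one appraiser against another, or an appraiser against the standard, which covers both kinds of consistency mentioned above.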

If you do not examine your measurement system, you’re much more likely to add variation and inconsistency to your data that can wind up clouding your analysis.

3: Beware of Confounding or Lurking Variables

As you collect data, be careful to avoid introducing unintended and unaccounted-for variables. These “lurking” variables can make even the most carefully collected data unreliable—and such hidden factors often are insidiously difficult to detect.

A well-known example involves World War II-era bombing runs. Analysis showed that accuracy increased when bombers encountered enemy fighters, confounding all expectations. But a key variable hadn’t been factored in: weather conditions. On cloudy days, accuracy was terrible because the bombers couldn’t spot landmarks, and the enemy didn’t bother scrambling fighters. On clear days, bombers could see their targets and fighters rose to meet them, so fighter encounters and accurate bombing went together.

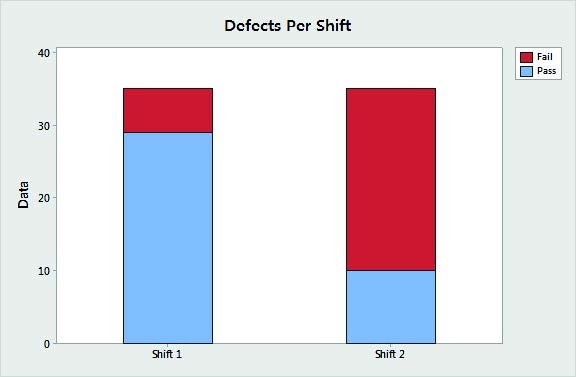

Suppose that data for your company’s key product shows a much larger defect rate for items made by the second shift than items made by the first.

Given only this information, your boss might suggest a training program for the second shift, or perhaps even more drastic action.

But could something else be going on? Your raw materials come from three different suppliers.

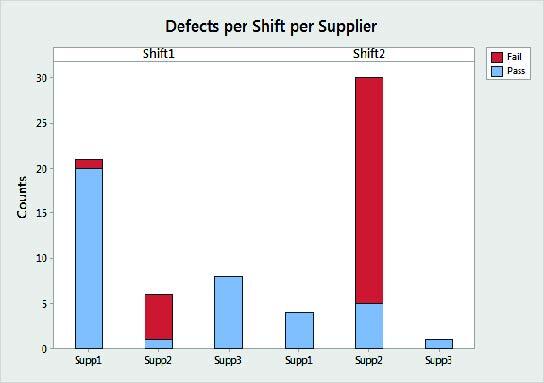

What does the defect rate data look like if you include the supplier along with the shift?

Now you can see that defect rates for both shifts are higher when using supplier 2’s materials. Not accounting for this confounding factor almost led to an expensive “solution” that probably would do little to reduce the overall defect rate.
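The same check is easy to run on your own records: compute the defect rate by shift alone, then again by shift and supplier together. The sketch below uses invented counts (not the data behind the charts in this post), and the function `defect_rates` and the field names are hypothetical. The numbers are arranged so that the second shift happens to use far more of supplier 2's material.

```python
from collections import defaultdict


def defect_rates(records, by):
    """Defect rate (defects / units) for each combination of the `by` fields."""
    units = defaultdict(int)
    defects = defaultdict(int)
    for rec in records:
        key = tuple(rec[field] for field in by)
        units[key] += rec["units"]
        defects[key] += rec["defects"]
    return {key: defects[key] / units[key] for key in units}


# Invented production counts for illustration only.
records = [
    {"shift": "first", "supplier": "S1", "units": 400, "defects": 8},
    {"shift": "first", "supplier": "S2", "units": 100, "defects": 10},
    {"shift": "first", "supplier": "S3", "units": 500, "defects": 10},
    {"shift": "second", "supplier": "S1", "units": 100, "defects": 2},
    {"shift": "second", "supplier": "S2", "units": 800, "defects": 80},
    {"shift": "second", "supplier": "S3", "units": 100, "defects": 2},
]

print("By shift only:")
for key, rate in sorted(defect_rates(records, ["shift"]).items()):
    print(f"  {key}: {rate:.1%}")

print("By shift and supplier:")
for key, rate in sorted(defect_rates(records, ["shift", "supplier"]).items()):
    print(f"  {key}: {rate:.1%}")
```

In these invented numbers, the second shift looks much worse overall, yet within each supplier the two shifts have identical defect rates. The gap comes entirely from which materials each shift received.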

Take the Time to Get Data You Can Trust…

Nobody sets out to waste time or sabotage their efforts by not collecting good data. But it’s all too easy to get problem data even when you’re being careful! When you collect data, be sure to spend the little bit of time it takes to make sure your data is truly trustworthy.