Previously, I discussed how business problems arise when people have conflicting opinions about a subjective factor, such as whether something is the right color or not, or whether a job applicant is qualified for a position. The key to resolving such honest disagreements and handling future decisions more consistently is a statistical tool called attribute agreement analysis. In this post, we'll cover how to set up and conduct an attribute agreement analysis.

Does This Applicant Qualify, or Not?

A busy loan office for a major financial institution processed many applications each day. A team of four reviewers inspected each application and categorized it as Approved, in which case it went on to a loan officer for further handling, or Rejected, in which case the applicant received a polite note declining to fulfill the request.

The loan officers began noticing inconsistency in approved applications, so the bank decided to conduct an attribute agreement analysis on the application reviewers.

Two outcomes were possible:

1. The reviewers make the right choice most of the time. If this is the case, loan officers can be confident that the reviewers do a good job, rejecting risky applicants and approving applicants with potential to be good borrowers.

2. The reviewers too often choose incorrectly. In this case, the loan officers might not be focusing their time on the best applications, and some qualified applicants may be wrongly rejected.

One particularly useful thing about an attribute agreement analysis: even if reviewers make the wrong choice too often, the results will indicate where the reviewers make mistakes. The bank can then use that information to help improve the reviewers' performance.
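Here is a minimal Python sketch of how one reviewer's mistakes might be broken down by type. It assumes each application has a known correct decision (a reference standard), and it uses a handful of made-up application IDs and ratings. The function name `classify_errors` is hypothetical and is not part of Minitab or any other tool.

```python
from collections import Counter

# Hypothetical data: the correct decision for each application (the standard)
# and one reviewer's decisions on the same applications.
standard = {"A01": "Approved", "A02": "Rejected", "A03": "Approved", "A04": "Rejected"}
reviewer = {"A01": "Approved", "A02": "Approved", "A03": "Rejected", "A04": "Rejected"}


def classify_errors(standard, ratings):
    """Count correct decisions and each kind of mistake for one reviewer."""
    tally = Counter()
    for item, truth in standard.items():
        given = ratings[item]
        if given == truth:
            tally["correct"] += 1
        elif given == "Approved":
            # The reviewer passed along an application that should have been declined.
            tally["approved but should be rejected"] += 1
        else:
            # The reviewer declined an application that should have been approved.
            tally["rejected but should be approved"] += 1
    return tally


print(classify_errors(standard, reviewer))
```

Separating false approvals from false rejections is useful because the two mistakes cost the bank different things: one wastes loan officers' time, the other turns away potentially good borrowers.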

The Basic Structure of an Attribute Agreement Analysis

A typical attribute agreement analysis asks individual appraisers to evaluate multiple samples, which have been selected to reflect the range of variation they are likely to observe. Each appraiser reviews every sample item several times, so the analysis reveals not only how well the appraisers agree with each other, but also how consistently each appraiser evaluates the same item.
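As a rough illustration of those two ideas, the Python sketch below computes simple percent agreement on hypothetical data: within each appraiser (same rating on every trial) and between appraisers (everyone gave the same rating on every trial). The data layout and function names are assumptions for this example. Minitab's output includes additional statistics, such as kappa, so treat this as a sketch of the concept rather than a reproduction of Minitab's calculations.

```python
A, R = "Approved", "Rejected"

# Hypothetical ratings: ratings[appraiser] is a list of trials, and each trial
# lists one decision per sample item, in the same item order every time.
ratings = {
    "Reviewer 1": [[A, R, A, R], [A, R, A, R]],
    "Reviewer 2": [[A, R, R, R], [A, A, R, R]],
}


def within_appraiser_agreement(trials):
    """Fraction of items this appraiser rated identically in every trial."""
    n_items = len(trials[0])
    consistent = sum(
        1 for i in range(n_items) if len({trial[i] for trial in trials}) == 1
    )
    return consistent / n_items


def between_appraiser_agreement(ratings):
    """Fraction of items on which all appraisers agreed across all trials."""
    all_trials = [trial for trials in ratings.values() for trial in trials]
    n_items = len(all_trials[0])
    agreed = sum(
        1 for i in range(n_items) if len({trial[i] for trial in all_trials}) == 1
    )
    return agreed / n_items


for name, trials in ratings.items():
    print(name, within_appraiser_agreement(trials))
print("All reviewers", between_appraiser_agreement(ratings))
```

In this made-up data, Reviewer 1 is perfectly self-consistent, while Reviewer 2 changed their mind on one of four items, and the two reviewers agree completely on only half of the items.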

For this study, the loan officers selected 30 applications: half that the officers agreed should be approved and half that should be rejected. These included both obvious and borderline applications.

Next, each of the four reviewers was asked to approve or reject the 30 applications twice. The two evaluation sessions took place one week apart, to make it less likely that reviewers would remember how they had classified each application the first time. The applications were randomly ordered each time.
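If you want to see what this randomization involves, here is a minimal Python sketch that builds a run order for this design: four reviewers, 30 applications, and two trials, with an independent shuffle for every reviewer in every trial. The application IDs, column names, and the `build_worksheet` function are hypothetical and are not Minitab's.

```python
import random

REVIEWERS = ["Reviewer 1", "Reviewer 2", "Reviewer 3", "Reviewer 4"]
APPLICATIONS = [f"App {n:02d}" for n in range(1, 31)]  # hypothetical IDs
TRIALS = 2


def build_worksheet(reviewers, items, trials, seed=None):
    """Return one row per judgment, with items shuffled separately for
    each reviewer in each trial. The Result field is left blank (None)
    to be filled in as the reviewers make their decisions."""
    rng = random.Random(seed)
    rows = []
    for trial in range(1, trials + 1):
        for reviewer in reviewers:
            order = items[:]  # copy so the master list is never reordered
            rng.shuffle(order)
            for item in order:
                rows.append(
                    {"Trial": trial, "Reviewer": reviewer, "Item": item, "Result": None}
                )
    return rows


worksheet = build_worksheet(REVIEWERS, APPLICATIONS, TRIALS, seed=1)
print(len(worksheet))  # 4 reviewers x 30 applications x 2 trials = 240 rows
```

Passing a fixed `seed` makes the order reproducible, which helps if you need to reprint a data collection form; leaving it as `None` gives a fresh random order each time.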

The reviewers did not know how the applications had been rated by the loan officers. In addition, they were asked not to talk about the applications until after the analysis was complete, to avoid biasing one another.

Using Software to Set Up the Attribute Agreement Analysis

You don't need software to perform an attribute agreement analysis, but a program like Minitab makes it easier both to plan the study and gather the data and to analyze the data once you have it. There are two ways to set up your study in Minitab.

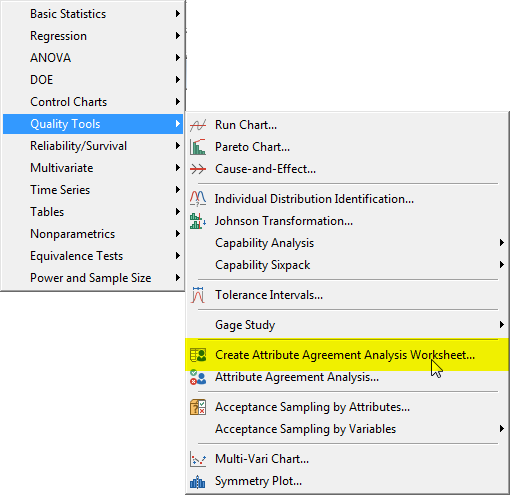

The first way is to go to Stat > Quality Tools > Create Attribute Agreement Analysis Worksheet... as shown here:

This option calls up an easy-to-follow dialog box that will set up your study, randomize the order of reviewer evaluations, and permit you to print out data collection forms for each evaluation session.

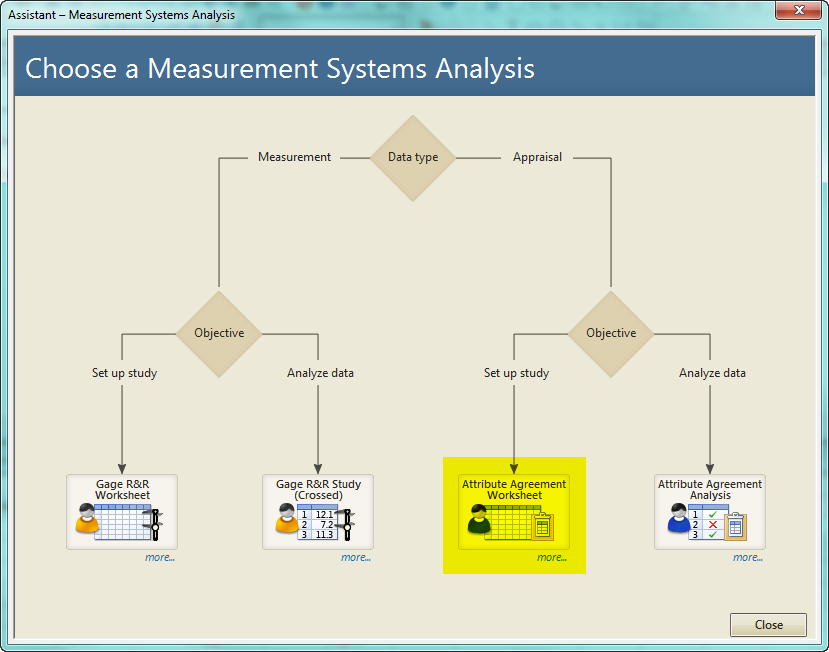

But it's even easier to use Minitab's Assistant. In the menu, select Assistant > Measurement Systems Analysis..., then click the Attribute Agreement Worksheet button:

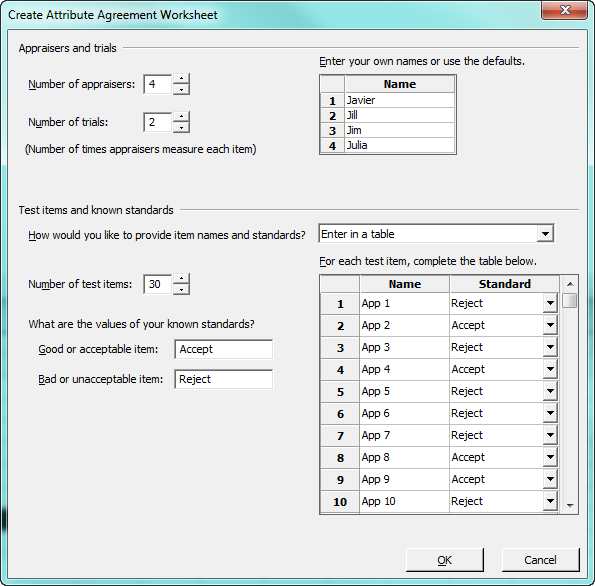

That brings up the following dialog box, which walks you through setting up your worksheet and printing out data collection forms, if desired. For this analysis, the Assistant dialog box is filled out as shown here:

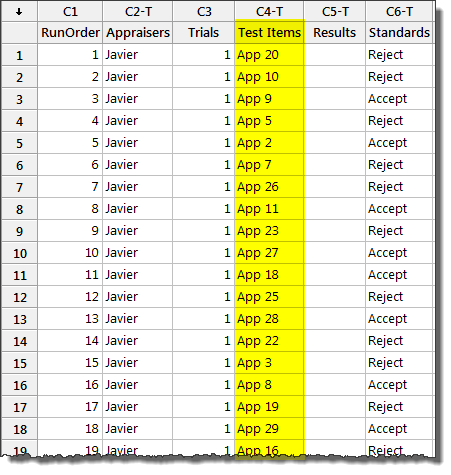

After you press OK, Minitab creates a worksheet for you and gives you the option to print out data collection forms for each reviewer and each trial. As you can see in the "Test Items" column below, Minitab automatically randomizes the order of the items in each trial, and the worksheet is arranged so you need only enter the reviewers' judgments in the "Results" column.

In my next post, we'll analyze the data collected in this attribute agreement analysis.